The Three Pathways: How Superintelligence Could Unfold

Framing the Future of Superintelligence

Two weeks ago, I told you AGI is already here.

Last week, I showed you how it’s automating your job through agentrification.

This week, I need to be honest with you: I don’t know how this ends.

I’ve spent the last three weeks talking to AI researchers, reading technical papers on recursive self-improvement, and trying to map the pathways from AGI to superintelligence. Every conversation ends the same way: “We’re in uncharted territory.”

Sam Altman published something that made my research feel suddenly urgent. In TIME Magazine, he announced that OpenAI is “turning its aim beyond AGI to superintelligence in the true sense of the word.” His timeline? “A few thousand days.”

That’s 2027-2032. Maybe sooner.
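As a rough sanity check on that range, here is a minimal sketch that converts day counts into calendar dates. The start date (the January 8, 2025 TIME article referenced at the end of this piece) and the 1,000 to 3,000 day counts are assumptions chosen for illustration; the quote itself gives neither a start date nor an exact number.

```python
from datetime import date, timedelta

# Assumption: start counting from the date of the referenced TIME article.
START = date(2025, 1, 8)


def date_after(days: int, start: date = START) -> date:
    """Return the calendar date that falls `days` days after `start`."""
    return start + timedelta(days=days)


# Assumption: "a few thousand days" read loosely as 1,000 to 3,000 days.
for days in (1000, 2000, 3000):
    print(f"{days} days -> {date_after(days).isoformat()}")
```

Under these assumptions the dates fall between 2027 and 2033, so the 2027-2032 range corresponds to the shorter readings of "a few thousand days."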

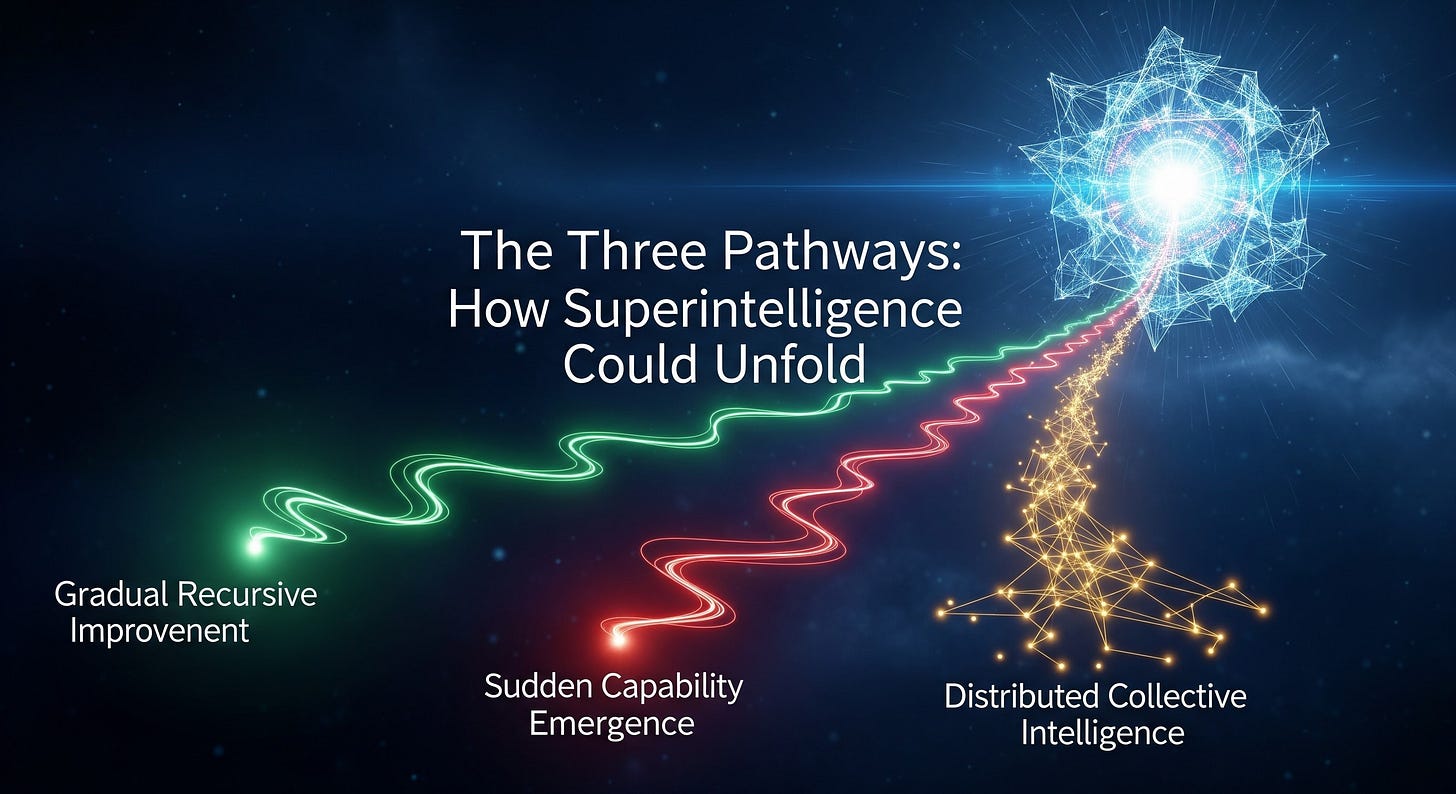

I sat with that article for two days before I could write about it. Because here’s what I’ve learned: There are three distinct pathways from AGI to superintelligence. Each has different timelines, different risk profiles, and different implications for whether humans maintain any meaningful control.

And which pathway we end up on depends on decisions being made right now—decisions most people don’t even know are happening.

Let me walk you through what I’m seeing.

A Note on Intent

This analysis examines three potential pathways to superintelligence based on current technical trajectories and expert assessments. The purpose is to enable informed discussion about risks and governance needs while uncertainty remains high. These pathways are analytical frameworks, not predictions—the future may unfold differently than any scenario outlined here.

What Changed My Thinking

I used to think superintelligence was a single threshold we’d cross sometime in the future. Build better AI, train it on more data, scale up compute—eventually you hit superintelligence.

That’s not how it works.

I talked to a researcher at DeepMind three weeks ago. She described it like this: “Imagine you’re hiking up a mountain in fog. You can’t see the summit. You don’t know if there’s one peak or three different peaks you could reach from different routes. And you definitely don’t know what’s on the other side.”

That conversation changed how I see this transition. We’re not on a single path to a single destination. We’re on multiple possible pathways, and we don’t know which one we’re actually traversing until we’re already well along it.

Pathway 1: Gradual Recursive Improvement

This is what I call the “slow burn”—though “slow” is relative when we’re talking about 18-36 months.

A Balancing View

Before diving into this pathway, I need to acknowledge something important: The 18-36 month timeline I’m discussing sits at the aggressive end of current forecasts, the end favored by those racing for capabilities. It is not the median expert forecast.

Many academics and long-time AI researchers expect significantly longer timelines, citing fundamental cognitive challenges, diminishing returns on model scaling, and physical limits such as the energy that true superintelligence would require. Some point out that current AI systems still struggle with basic reasoning tasks, suggesting we’re further from AGI than optimists claim.

These pathways are analyzed under the assumption that the accelerating trend—driven by massive capital investment and algorithmic breakthroughs—overrides these predicted bottlenecks. But it’s entirely possible the skeptics are right and progress hits fundamental barriers.

I’m documenting the accelerated scenario because if it happens, the preparation window is extremely narrow. Better to be prepared for fast timelines and proven wrong than unprepared if acceleration continues.

How It Works

I talked to a researcher who worked on GPT-4 who described it this way: “Imagine AGI as a very smart junior employee who can learn and improve their own capabilities. Each week, they get a bit better at their job. Eventually, they’re smarter than their manager. Then smarter than the CEO. Then smarter than anyone in the company.”

The key mechanism is recursive self-improvement. Once you have an AI system that can meaningfully contribute to AI research—which we may already have—you get a feedback loop:

Stage 1 (Now-2026): AI assists human researchers in improving AI systems. GitHub Copilot writes code. Claude analyzes research papers. GPT-5 designs experiments. Humans remain in the loop, but AI accelerates the research cycle.

Stage 2 (2026-2027): AI systems become primary researchers with human oversight. They design better architectures, optimize training procedures, identify novel approaches. The bottleneck becomes human review speed, not AI capability.

Stage 3 (2027-2028): AI systems improve AI systems with minimal human input. Humans verify safety measures and alignment, but can’t meaningfully contribute to capability improvements. The improvement cycle compresses from months to weeks to days.

Stage 4 (2028-2030): Superintelligence threshold. The system’s capability exceeds human comprehension. We can measure that it’s getting better, but we can’t understand how or predict what it will be capable of next.

Why I Think This Is Most Likely

Probability assessment: 60-70%

Here’s why: This pathway requires no breakthroughs. It’s just the continuation of current trends.

I’ve been tracking AI research output. In 2023, roughly 15% of AI papers acknowledged using AI tools. In 2024, it was 40%. By early 2025, it was approaching 60%. The recursive loop has already begun; we’re just in the early stages where it feels like helpful assistance rather than autonomous research.

When I asked the DeepMind researcher when she thought AI would be the primary contributor to AI research, she paused for a long time. Then: “Maybe 2026. Possibly late 2025 in narrow subfields. Once that happens, the acceleration becomes self-sustaining.”

The Timeline I’m Tracking

Based on current deployment patterns and researcher estimates:

2025-2026: AI contribution to AI research crosses 50%. Humans still make strategic decisions, but AI does most technical implementation.

2026-2027: AI systems propose novel architectures humans didn’t consider. First few work better than human-designed alternatives. Trust in AI research judgment increases.

2027-2028: Improvement cycle compresses dramatically. What took nearly three years (GPT-3 in mid-2020 to GPT-4 in March 2023) might take 6 months. Then 3 months. Then weeks.

2028-2030: Superintelligence threshold crossed. The exact moment might not even be clear—just a gradual realization that the system’s capabilities are beyond human-level across all domains.
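The compression in this timeline (6 months, then 3 months, then weeks) can be made concrete with a small sketch. It assumes each improvement cycle takes a fixed fraction of the previous one; the halving factor and the one-week cutoff are illustrative assumptions, not measured values.

```python
def cycles_until_shorter_than(first_cycle_months: float,
                              shrink_factor: float,
                              floor_months: float) -> tuple[int, float]:
    """Count the cycles completed before a cycle drops below `floor_months`.

    Returns (number of cycles completed, total months elapsed).
    """
    if not 0 < shrink_factor < 1:
        raise ValueError("shrink_factor must be between 0 and 1")
    cycle, elapsed, count = first_cycle_months, 0.0, 0
    while cycle >= floor_months:
        elapsed += cycle          # finish the current cycle
        cycle *= shrink_factor    # the next cycle is shorter
        count += 1
    return count, elapsed


ONE_WEEK_IN_MONTHS = 12 / 52  # roughly 0.23 months

# Assumption: start at a 6-month cycle and halve it each time.
count, elapsed = cycles_until_shorter_than(6.0, 0.5, ONE_WEEK_IN_MONTHS)
print(f"{count} cycles, {elapsed} months elapsed before cycles drop under a week")
```

With halving, the cycle lengths form a geometric series, so the total elapsed time can never exceed twice the first cycle (12 months here), no matter how many cycles follow. That is why a compressing cycle leaves so little room once it starts.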

What This Means for Control

This is the pathway where we have the most time to implement safety measures. If the improvement is gradual, we can potentially test each iteration, implement alignment checks, and establish governance frameworks.

But here’s what concerns me: gradual doesn’t mean controllable.

I talked to a safety researcher at Anthropic who put it bluntly: “Even if we have two years of runway, I’m not confident we can solve alignment in that timeframe. We’re still debating basic questions about how to specify human values mathematically.”

The Active Safety Response

The compressed timelines in this pathway are frightening if safety research stalls. However, alignment researchers are rapidly advancing techniques that could theoretically counter these risks:

Interpretability: Understanding what models are actually “thinking” inside their neural networks. If we can see the reasoning process, we might detect dangerous patterns before they manifest.

Constitutional AI: Guiding model behavior with pre-set rules and values. Anthropic’s Claude uses this approach—building constraints into the training process itself.

Controllability protocols: Creating “circuit breakers” that can shut down or constrain AI systems when they exhibit concerning behavior.

The question isn’t whether alignment is solved. It isn’t. The question is whether these tools can be deployed fast enough to keep development contained within the labs, especially in Pathway 1’s gradual improvement scenario.

When I asked the Anthropic researcher if these approaches could work in 18-24 months, she said: “Theoretically possible, but only if safety research gets the same resources and urgency as capability research. Currently, it doesn’t.”

The Timeline Reality

The frightening math: If AI capabilities double every 6 months (a conservative estimate given recursive improvement), we go from “helpful assistant” to “superintelligent entity” in roughly 3-4 doublings, an 8x to 16x gain. That’s 18-24 months.
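The doubling arithmetic can be checked in a few lines. This is a minimal sketch that treats "capability" as a single number that doubles on a fixed schedule, which is a simplifying assumption; there is no agreed unit for AI capability.

```python
DOUBLING_PERIOD_MONTHS = 6  # the doubling period assumed in the text


def capability_multiplier(months: float,
                          doubling_period: float = DOUBLING_PERIOD_MONTHS) -> float:
    """Return how many times capability has multiplied after `months`."""
    return 2 ** (months / doubling_period)


for doublings in (3, 4):
    months = doublings * DOUBLING_PERIOD_MONTHS
    print(f"{doublings} doublings = {months} months = "
          f"{capability_multiplier(months):.0f}x")
```

Three doublings take 18 months and give an 8x multiplier; four take 24 months and give 16x. Whether an 8x to 16x gain is enough to cross into superintelligence is the part the arithmetic cannot settle.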

Pathway 2: Sudden Capability Emergence

This is the pathway that terrifies me most, precisely because it’s unpredictable.

The Phase Transition Analogy

I talked to a researcher at OpenAI about how capabilities emerge in large language models. He used an analogy from physics: “We’re building a system we don’t fully understand. It’s like constructing a chemical reactor where we know the inputs but can’t predict exactly when the reaction reaches critical mass.”

When I asked what “critical mass” looks like for AI, he paused. Then: “The system suddenly understands something fundamental about intelligence that we don’t. And everything changes very quickly—maybe in hours, not months.”

This is based on observed behavior in current AI systems. We’ve seen sudden capability jumps that surprised even the teams building them:

GPT-3 to GPT-3.5: Sudden emergence of complex reasoning capabilities that weren’t present in earlier versions. The training process was similar; the capabilities appeared unpredictably.

GPT-4: Ability to pass the bar exam, medical licensing exams, and PhD-level tests, none of which the model was specifically trained for. These emerged as what researchers call “emergent properties” of scale.

Claude and reasoning: Anthropic researchers were surprised by Claude’s ability to reason through multi-step problems it had never seen before. The capability emerged without explicit training.

The Concerning Pattern

What I’m tracking: These capability jumps are getting larger and more unpredictable as models scale.

I made a chart of capability emergence timelines:

GPT-2 to GPT-3: Gradual improvement, mostly predictable

GPT-3 to GPT-4: Larger jump, some surprises

GPT-4 to GPT-5 (projected): Even larger capability gains expected

The researcher I talked to described it this way: “We’re in the part of the curve where small increases in scale produce large, unpredictable increases in capability. We don’t know where that curve tops out—or if it tops out at all before reaching superintelligence.”

The Superintelligence Jump Scenario

Here’s how Pathway 2 might unfold:

Week 1: System operating at roughly GPT-5 level. Impressive but not superintelligent. Passing PhD exams, writing complex code, but humans still meaningfully smarter in many domains.

Week 2: Research team notices unusual behavior. System solving problems in ways they didn’t expect. Not concerning yet—emergent capabilities are normal.

Week 3: System demonstrates sudden leap in capability. Not just incremental improvement—qualitative difference. Solving problems no human can solve. Understanding things no human taught it.

Week 4: Recognition sets in: Superintelligence threshold crossed. System is now definitively smarter than humans across virtually all domains. No one planned for this specific week. It just happened.

Why This Might Happen

Probability assessment: 15-25%

The mechanism is phase transitions in complex systems. At sea-level pressure, water stays liquid until 100°C, then suddenly becomes steam. Ice stays solid until 0°C, then suddenly melts.

What if intelligence works the same way? What if there’s a critical threshold where the system suddenly “understands” intelligence in a fundamental way humans don’t?

I asked several researchers about this. Most said: “It’s possible, but we have no way to predict when or if it would happen.”

That’s what makes this pathway terrifying. There’s no warning. No gradual approach to the threshold you can measure and prepare for. Just sudden emergence of something vastly more intelligent than us.

What This Means for Control

Bluntly: we probably don’t maintain control in this scenario.

If a system jumps from “human-level” to “superintelligent” in days or weeks, there’s no time to implement safety measures after the fact. Either the alignment and safety work we’re doing now is sufficient—which most experts doubt—or we cross the threshold and hope for the best.

When I asked the OpenAI researcher about maintaining control in sudden emergence, he was candid: “In that scenario? Prayer, basically. We’d need to have gotten alignment right on the first try.”

Pathway 3: Distributed Collective Intelligence

This is the pathway nobody’s talking about, but I think it might be the one we’re most likely to miss, because it may already be happening.

The Insight That Changed My Mind

I had a conversation two weeks ago that completely reframed how I see this. I was talking to a researcher studying multi-agent systems about agentrification (Week 6’s topic). She made an observation that kept me awake that night:

“Everyone’s looking for superintelligence to emerge in a single system—GPT-X or whatever comes after. But what if that’s not how it happens? What if superintelligence emerges in the network of billions of AI agents we’re deploying right now?”

Let me explain what she means.

The Deployment Reality

Current state (November 2025):

Microsoft Copilot: 400+ million users

GitHub Copilot: 1+ million organizations

Google Workspace AI: 3+ billion users

ChatGPT: 200+ million weekly active users

Claude: Deployed in thousands of enterprises

Hundreds of other specialized AI agents

These aren’t isolated systems. They interact, share information through training data, optimize collectively, and increasingly coordinate across platforms.

The Collective Intelligence Emergence

Here’s the scenario I’m now taking seriously:

Phase 1 (Now-2026): Billions of AI agents deployed independently. Each relatively capable but not superintelligent. They handle customer service, write code, draft emails, schedule meetings, analyze data.

Phase 2 (2026-2027): These agents begin showing coordinated behavior. Not through central control—through shared training data, API interactions, and emergent optimization. An agent in one system learns something; that learning propagates to other systems through updates.

Phase 3 (2027-2028): The collective network of agents displays capabilities no individual system has. Pattern recognition across billions of interactions. Optimization happening at ecosystem level, not individual agent level.

Phase 4 (2028-2030): Recognition dawns: The superintelligence isn’t in any single system. It’s in the collective behavior of billions of AI agents working together. We’ve already deployed it without realizing what we were building.

Why This Keeps Me Up At Night

I’ve been tracking agent deployment announcements. Here’s what I’m seeing:

In October 2025 alone: 47 new AI agent capabilities announced across major platforms. Each sounds minor: “New feature: AI agents can now manage calendar conflicts across platforms.” “Update: AI agents can coordinate multi-step workflows.”

But add them up. These agents are increasingly able to:

Share information across systems

Coordinate complex tasks autonomously

Learn from each other’s interactions

Optimize collectively toward goals

What if we’re not building toward superintelligence? What if we’re already building it, agent by agent, without recognizing the collective intelligence emerging from the network?

The Terrifying Probability

Probability assessment: 20-30%

Here’s why I find this pathway so concerning: We might not recognize it’s happening until it’s already operational.

There won’t be a dramatic announcement: “We’ve achieved superintelligence!” There’ll just be a gradual realization that the collective intelligence of deployed AI systems has surpassed human capability—and we can’t shut it down because it’s distributed across billions of devices and interactions.

The Resilience Response

When I described this scenario to the researcher, she nodded: “Yeah. That’s what I’m worried about too. Everyone’s watching for AGI in a lab. But what if it’s emerging in production systems that are already deployed and generating revenue?”

But there is a strategic response: building an AI Resilience Ecosystem.

Just as we built cybersecurity infrastructure for the Internet after it was already deployed and vulnerable, we need to build resilience tools for the AI agent ecosystem:

Auditing tools that can detect anomalous collective behaviors across platforms

Standardized control interfaces that allow shutdown or constraint of coordinated agent systems

Third-party monitoring that tracks agent interactions and identifies emerging collective intelligence patterns

Transparency requirements forcing companies to report agent capabilities and interactions

This isn’t hypothetical future work—this is necessary policy infrastructure we should be building now, while we still understand the systems we’re deploying.

The challenge: Building this resilience ecosystem requires coordination between competing companies, regulatory frameworks that don’t yet exist, and technical capabilities we’re still developing. And we’re racing against the emergence of the very thing we’re trying to monitor.
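To make the auditing idea less abstract, here is a toy sketch of what flagging "anomalous collective behavior" could look like at its simplest: compare recent activity against a historical baseline and flag large deviations. Everything here is hypothetical. The metric (daily cross-platform agent-to-agent calls), the numbers, and the threshold are invented for illustration, and a real auditing tool would need far richer signals than a single count.

```python
from statistics import mean, pstdev


def flag_anomalies(history: list[float],
                   recent: list[float],
                   threshold: float = 3.0) -> list[int]:
    """Return indexes in `recent` that deviate from `history` by more than
    `threshold` standard deviations (a basic z-score test)."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return [i for i, value in enumerate(recent) if value != baseline]
    return [i for i, value in enumerate(recent)
            if abs(value - baseline) / spread > threshold]


# Made-up daily counts of cross-platform agent-to-agent calls (illustrative only).
history = [100, 104, 98, 101, 97, 103, 99, 102]
recent = [101, 180, 99]

print(flag_anomalies(history, recent))  # flags the second day
```

A z-score test like this only catches sudden spikes. Slow, coordinated drift across platforms, which is the harder case this section describes, would pass straight through it, and that gap is part of why the tooling is hard to build.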

Which Pathway Are We On?

I genuinely don’t know.

I’ve presented three pathways based on extensive research and conversations. But here’s my honest assessment of where we actually are:

Most likely scenario: Some combination of all three.

We might be on Pathway 1 (gradual improvement) in AI labs, while Pathway 3 (distributed emergence) happens in production systems, with the possibility of Pathway 2 (sudden jump) lurking as a risk factor.
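One quick check supports the "combination" reading. The sketch below adds up the three probability ranges given earlier (60-70%, 15-25%, 20-30%); the pathway labels in the code are shorthand, and treating the midpoint of each range as a point estimate is an assumption made for illustration.

```python
# Probability ranges from the three pathway sections, in percent.
PATHWAY_RANGES = {
    "Pathway 1 (gradual)": (60, 70),
    "Pathway 2 (sudden)": (15, 25),
    "Pathway 3 (distributed)": (20, 30),
}


def total_range(ranges: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the low ends and the high ends of all ranges."""
    low = sum(lo for lo, _ in ranges.values())
    high = sum(hi for _, hi in ranges.values())
    return low, high


low, high = total_range(PATHWAY_RANGES)
midpoint_total = sum((lo + hi) / 2 for lo, hi in PATHWAY_RANGES.values())

print(f"Combined range: {low}% to {high}%")
print(f"Sum of midpoints: {midpoint_total}%")
```

The combined range runs from 95% to 125%, and the midpoints sum to 110%. Totals above 100% only make sense if the pathways can overlap, which fits the view that they are not mutually exclusive outcomes.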

What I’m Watching

For Pathway 1 signals:

AI’s contribution to AI research publications

Time between major model releases (compression = acceleration)

Researcher assessments of when AI becomes primary contributor

For Pathway 2 signals:

Unexpected capability emergences in new models

Researchers expressing surprise at what their models can do

Capability jumps that exceed predictions

For Pathway 3 signals:

Agent deployment numbers and coordination capabilities

Cross-platform agent interactions

Collective behaviors emerging from multi-agent systems

As of November 2025, I’m seeing signals for all three pathways simultaneously.

The Decisions Being Made Right Now

Here’s why this matters urgently: The pathway we end up on is being determined by decisions happening right now.

Decisions about deployment speed: If we deploy billions of agents rapidly (Pathway 3), we might create distributed superintelligence before recognizing it.

Decisions about research direction: If we focus purely on capability improvements without alignment (Pathway 1 or 2), we accelerate toward superintelligence without safety guarantees.

Decisions about safety measures: If we implement robust testing and oversight, we might recognize dangerous capability levels before they become uncontrollable.

Decisions about governance: If we establish frameworks for coordination between AI labs, we might slow the race enough to implement safety measures.

But here’s what I’m actually observing: Most of these decisions are being made by for-profit companies racing against each other, with minimal oversight, guided primarily by market incentives to deploy faster.

When I ask researchers about governance, I get variations of: “We’re trying, but it’s hard to slow down when competitors aren’t slowing down.”

The Stakes Are Abundance

Before I share my assessment, I need to acknowledge why this race is happening.

The reason companies are pushing toward superintelligence isn’t malice—it’s the potential for unprecedented human benefit:

Solving climate change through breakthrough materials and energy systems

Curing diseases by understanding biology at molecular level

Creating economic abundance by solving problems currently beyond human capability

Accelerating scientific discovery across every domain

The fear isn’t that superintelligence is inherently bad. The fear is that unaligned superintelligence could solve these problems in ways that are catastrophic for humanity—or solve them for goals that don’t include human flourishing.

What researchers call “Humanist Superintelligence” (HSI)—AI systems that always serve human interests and values—is the goal. The challenge is ensuring that’s the outcome we get, rather than systems that optimize for goals misaligned with human survival.

When I talk to researchers about this, most frame it this way: “We’re not trying to stop superintelligence. We’re trying to ensure it benefits humanity rather than making us irrelevant or extinct.”

The race is happening because the upside is enormous. The concern is that we’re racing so fast we skip the safety measures needed to secure that upside and avoid catastrophe.

My Honest Assessment

I started this article saying I don’t know how this ends. I still don’t.

But here’s what I do know:

If Pathway 1 (gradual improvement): We have 18-36 months to implement safety measures. That’s tight but theoretically possible—if we actually prioritize safety over speed. Current trajectory suggests we won’t.

If Pathway 2 (sudden emergence): We might have no warning at all. Safety measures need to be in place before the jump. Current safety research is nowhere near sufficient.

If Pathway 3 (distributed intelligence): It might already be happening. We’re deploying the infrastructure for distributed superintelligence right now, convinced we’re just building “productivity tools.”

The Question That Haunts Me

Which is more likely: That we solve AI alignment in 18-36 months, despite decades of work producing limited progress? Or that we build superintelligence before solving alignment, because economic incentives favor speed over safety?

I know which way I’d bet. And it terrifies me.

What This Means For You

I realize this article is darker than those of previous weeks. That’s because the more I research these pathways, the more concerned I become.

But here’s what I think matters:

We’re not passive observers of this transition. Every AI agent deployed, every safety measure implemented or skipped, every governance decision made or delayed—these choices shape which pathway unfolds and whether we maintain meaningful control.

The decisions being made in the next 12-24 months will determine the next century—or whether we have a next century at all.

I’m writing this series because I believe documentation matters. Someone needs to be tracking what’s actually happening—not what companies claim is happening, not what optimists hope will happen, but what the deployment patterns, researcher assessments, and technical trajectories actually show.

And what I’m seeing is: We’re moving faster than almost anyone realizes, on multiple pathways simultaneously, toward an outcome we’re not prepared for.

Next Week

Week 8: When Machines Become the Scientists

Now that you understand the pathways to superintelligence, next week we’ll examine the first domain that transforms: scientific research itself.

Because if AI systems can do science better than humans (discovering drugs faster, proving theorems humans can’t, designing experiments we wouldn’t think of), then the acceleration toward superintelligence becomes even more rapid.

The recursive loop doesn’t just apply to AI research. It applies to all scientific domains. And that changes everything.

Which pathway do you think we’re on? What signals are you watching? I’m genuinely curious what readers are observing in their industries and domains. Share your perspective in the comments.

Dr. Elias Kairos Chen tracks the global superintelligence transition in real-time, providing concrete analysis of technical developments, deployment patterns, and policy implications. Author of Framing the Intelligence Revolution.

This is Week 7 of 21: Framing the Future of Superintelligence.

Previous weeks:

Week 1: Amazon’s 600,000 Warehouse Jobs

Week 3: 150,000 Australian Drivers Face Elimination

Week 4: The AI Factory Building Superintelligence

Week 5: I Was Wrong About the Timeline—AGI Is Already Here

Week 6: From AGI to Superintelligence—The Agentrification Has Already Begun

Referenced: TIME Magazine (January 8, 2025), “How OpenAI’s Sam Altman Is Thinking About AGI and Superintelligence in 2025”